Logistic Regression

Getting started with Logistic Regression theory

Introduction

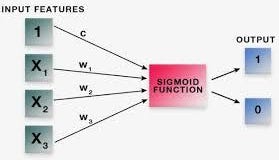

Logistic Regression is a supervised learning algorithm widely used for classification. It predicts a binary outcome (1/0, Yes/No, True/False) from a set of independent variables. To represent a binary or categorical outcome, we use dummy variables (for example, 1 for Yes and 0 for No).

Like linear regression, logistic regression is represented by an equation. The difference is that the output of the linear regression equation is passed through a sigmoid (logistic) function, which maps any real number to a value between 0 and 1 that can be interpreted as a probability.

Simple Linear and Multiple Linear Regression Equation:

y = b0 + b1x1 + b2x2 + ... + bnxn + ε

where ε is the error term.

Sigmoid function:

p = 1 / (1 + e^(-y))
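The sigmoid function can be sketched in Python as below. This is a minimal illustration rather than any library's implementation, and the name `sigmoid` is our own choice. It branches on the sign of y so that `math.exp` is never called with a large positive argument, which would raise an overflow error; both branches compute the same value 1 / (1 + e^(-y)).

```python
import math

def sigmoid(y: float) -> float:
    """Map any real number y to a probability p in (0, 1): p = 1 / (1 + e^(-y))."""
    if y >= 0:
        return 1.0 / (1.0 + math.exp(-y))
    # For negative y, use the algebraically equivalent form e^y / (1 + e^y)
    # so math.exp only ever sees a non-positive argument.
    z = math.exp(y)
    return z / (1.0 + z)

for y in (-5, -1, 0, 1, 5):
    print(f"y = {y:>2}  ->  p = {sigmoid(y):.4f}")
```

sigmoid(0) is exactly 0.5; large positive inputs approach 1 and large negative inputs approach 0, which is what lets the output be read as a probability.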

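Logistic Regression Equation:

p = 1 / (1 + e^-(b0 + b1x1 + b2x2 + ... + bnxn))

where p is the predicted probability that the outcome is 1 (the positive class),

e is Euler's number (approximately 2.718),

y = b0 + b1x1 + b2x2 + ... + bnxn is the linear part of the model (the log-odds of the outcome),

b0 is the bias or intercept term.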

Each column in your input data has an associated b coefficient (a constant real value) that must be learned from your training data, typically by maximum likelihood estimation.
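As a minimal sketch of how the b coefficients can be learned, the code below fits b0, b1, ... by batch gradient descent on the log loss (the negative log-likelihood averaged over the training rows). This is one simple way to do maximum likelihood estimation; libraries usually rely on more sophisticated optimizers. The toy data set, the function names `predict_proba` and `fit`, the learning rate, and the iteration count are all hypothetical choices for illustration.

```python
import math

def sigmoid(y):
    # Numerically stable form of p = 1 / (1 + e^(-y)).
    if y >= 0:
        return 1.0 / (1.0 + math.exp(-y))
    z = math.exp(y)
    return z / (1.0 + z)

def predict_proba(b, x):
    """p = 1 / (1 + e^-(b0 + b1*x1 + ... + bn*xn)); b[0] is the intercept b0."""
    y = b[0] + sum(bj * xj for bj, xj in zip(b[1:], x))
    return sigmoid(y)

def fit(X, t, lr=0.1, epochs=5000):
    """Learn coefficients by batch gradient descent on the average log loss.

    X is a list of feature rows and t is the list of 0/1 targets.
    """
    n_features = len(X[0])
    m = len(X)
    b = [0.0] * (n_features + 1)  # start every coefficient at zero
    for _ in range(epochs):
        grad = [0.0] * (n_features + 1)
        for x, target in zip(X, t):
            error = predict_proba(b, x) - target  # derivative of the log loss w.r.t. y
            grad[0] += error                       # intercept gradient
            for j, xj in enumerate(x):
                grad[j + 1] += error * xj
        # Step against the gradient averaged over all rows.
        b = [bj - lr * g / m for bj, g in zip(b, grad)]
    return b

# Hypothetical toy data: hours studied -> passed the exam (1) or not (0).
X = [[0.5], [1.0], [1.5], [2.0], [2.5], [3.0], [3.5], [4.0]]
t = [0, 0, 0, 1, 0, 1, 1, 1]

b = fit(X, t)
print("coefficients:", [round(v, 3) for v in b])
for hours in (1.0, 2.25, 4.0):
    print(f"hours = {hours}: p(pass) = {predict_proba(b, [hours]):.3f}")
```

Because the classes overlap (2.0 hours passed, 2.5 hours failed), the log loss has a finite minimum and gradient descent settles on a positive slope b1: more hours studied gives a higher predicted probability of passing.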

Differences between Linear Regression and Logistic Regression:

- In Linear Regression the target is a continuous (real-valued) variable, while in Logistic Regression the target is a categorical (binary, nominal, or ordinal) variable.

- In Linear Regression, the predicted value is the mean of the target variable at the given values of the input variables, whereas in Logistic Regression it is the probability of a particular level of the target variable at the given values of the input variables.

Types of Logistic Regression:

- Binary Logistic Regression: The target variable has only two possible outcomes. For example, classifying emails as Spam or Not Spam.

- Multinomial Logistic Regression: The target variable has three or more categories without ordering. For example, predicting which type of food is preferred (Veg, Non-Veg, Vegan).

- Ordinal Logistic Regression: The target variable has three or more categories with ordering. For example, predicting a movie rating from 1 to 5.
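For the multinomial case, one common formulation (an assumption here, since the text above does not specify one) gives each class its own set of b coefficients and turns the resulting linear scores into probabilities with the softmax function, which generalizes the sigmoid. The coefficient values and the two features x1, x2 below are made up for the Veg / Non-Veg / Vegan example; ordinal logistic regression uses a different formulation that is not shown.

```python
import math

def softmax(scores):
    """Convert K linear scores into K probabilities that sum to 1."""
    # Subtracting the largest score leaves the result unchanged
    # but keeps math.exp from overflowing.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def multinomial_proba(coefs, x):
    """coefs maps each class to [b0, b1, ..., bn]; returns {class: probability}."""
    classes = list(coefs)
    scores = [coefs[c][0] + sum(bj * xj for bj, xj in zip(coefs[c][1:], x))
              for c in classes]
    return dict(zip(classes, softmax(scores)))

# Hypothetical coefficients for two made-up features x1 and x2.
coefs = {
    "Veg":     [0.2, 1.0, -0.5],
    "Non-Veg": [0.0, -0.8, 1.2],
    "Vegan":   [-0.3, 0.6, -1.0],
}
probs = multinomial_proba(coefs, [1.0, 0.5])
print(probs)
print("predicted class:", max(probs, key=probs.get))
```

With only two classes and one score fixed at 0, softmax([y, 0]) gives e^y / (e^y + 1) for the first class, which is exactly the sigmoid of y, so binary logistic regression is the two-class special case.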

Decision Boundary

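To predict which class a data point belongs to, a threshold is applied to the estimated probability: if the probability is at or above the threshold, the point is assigned to one class, otherwise to the other. The set of points in feature space where the estimated probability equals the threshold is called the Decision Boundary.

For example, if predicted_value ≥ 0.5, classify the email as spam; otherwise, classify it as not spam. Because the sigmoid equals 0.5 exactly when b0 + b1x1 + b2x2 + ... = 0, a 0.5 threshold on the original features gives a linear decision boundary. The boundary can be made non-linear by adding polynomial terms of the features (for example, x1² or x1x2), which lets the model fit more complex decision boundaries.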
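The thresholding step can be sketched as below, assuming hypothetical coefficients and two abstract features x1 and x2 (the names `classify`, `p_linear`, and `p_circle` are illustrative only). With a 0.5 threshold, the linear model labels a point spam exactly when -1 + x1 + x2 ≥ 0, so its boundary is the straight line x1 + x2 = 1. Feeding the same model the squared features x1² and x2² moves the boundary to the circle x1² + x2² = 1.

```python
import math

def sigmoid(y):
    # Numerically stable form of p = 1 / (1 + e^(-y)).
    if y >= 0:
        return 1.0 / (1.0 + math.exp(-y))
    z = math.exp(y)
    return z / (1.0 + z)

def classify(p, threshold=0.5):
    """Apply the decision threshold to an estimated probability."""
    return "spam" if p >= threshold else "not spam"

def p_linear(x1, x2):
    # Hypothetical coefficients b0 = -1, b1 = 1, b2 = 1:
    # p >= 0.5 exactly when x1 + x2 >= 1 (a straight-line boundary).
    return sigmoid(-1.0 + 1.0 * x1 + 1.0 * x2)

def p_circle(x1, x2):
    # Same model form, but applied to the polynomial features x1^2 and x2^2:
    # p >= 0.5 exactly when x1^2 + x2^2 >= 1 (a circular boundary).
    return sigmoid(-1.0 + 1.0 * x1 ** 2 + 1.0 * x2 ** 2)

for point in [(0.2, 0.3), (0.8, 0.9), (-1.5, 0.0)]:
    print(point,
          "linear:", classify(p_linear(*point)),
          "| circular:", classify(p_circle(*point)))
```

The point (-1.5, 0.0) falls on different sides of the two boundaries: the linear model labels it not spam, while the model with squared features labels it spam.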

Advantages of Logistic Regression:

- It makes no assumptions about distributions of classes in feature space.

- Easily extended to multiple classes (multinomial regression).

- Natural probabilistic view of class predictions.

- Quick to train and very fast at classifying unknown records.

- Good accuracy for many simple data sets.

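- Less prone to overfitting than more flexible models when the number of features is small relative to the number of samples; with many features it can still overfit, and regularization is commonly used.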

Disadvantages of Logistic Regression:

- It cannot predict a continuous outcome; the target variable must be categorical.

- If the independent variables are not related to the target variable, logistic regression has no signal to learn from and performs poorly.

- Requires a large sample size for stable coefficient estimates.

Logistic Regression Assumptions:

- Binary logistic regression requires the dependent variable to be binary.

- The dependent variable is categorical, so it is not measured on an interval or ratio scale.

- Only the meaningful variables should be included.

- The independent variables should not be strongly correlated with each other. That is, the model should have little or no multicollinearity.

- Logistic regression requires fairly large sample sizes.
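One quick way to screen for multicollinearity, sketched here under stated assumptions, is to compute the Pearson correlation between every pair of independent variables and flag highly correlated pairs. The 0.8 cutoff, the feature names, and the data are hypothetical. A pairwise check can miss collinearity that involves three or more variables at once, which is what variance inflation factors (VIF) are designed to detect.

```python
import math
from itertools import combinations

def pearson(a, b):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def correlated_pairs(features, cutoff=0.8):
    """Return (name1, name2, r) for every pair of features with |r| >= cutoff."""
    flagged = []
    for (n1, v1), (n2, v2) in combinations(features.items(), 2):
        r = pearson(v1, v2)
        if abs(r) >= cutoff:
            flagged.append((n1, n2, round(r, 3)))
    return flagged

# Hypothetical independent variables: height_cm and height_in carry the same information.
features = {
    "height_cm": [150.0, 160.0, 170.0, 180.0, 190.0],
    "height_in": [59.1, 63.0, 66.9, 70.9, 74.8],
    "age":       [34.0, 22.0, 45.0, 29.0, 51.0],
}
print(correlated_pairs(features))
```

Here only the two height columns are flagged; keeping just one of them would remove the redundancy before fitting the model.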